BrainFM: A Foundation Model for Multi-modal Brain MRI Data with Applications to Few-shot Disease Detection

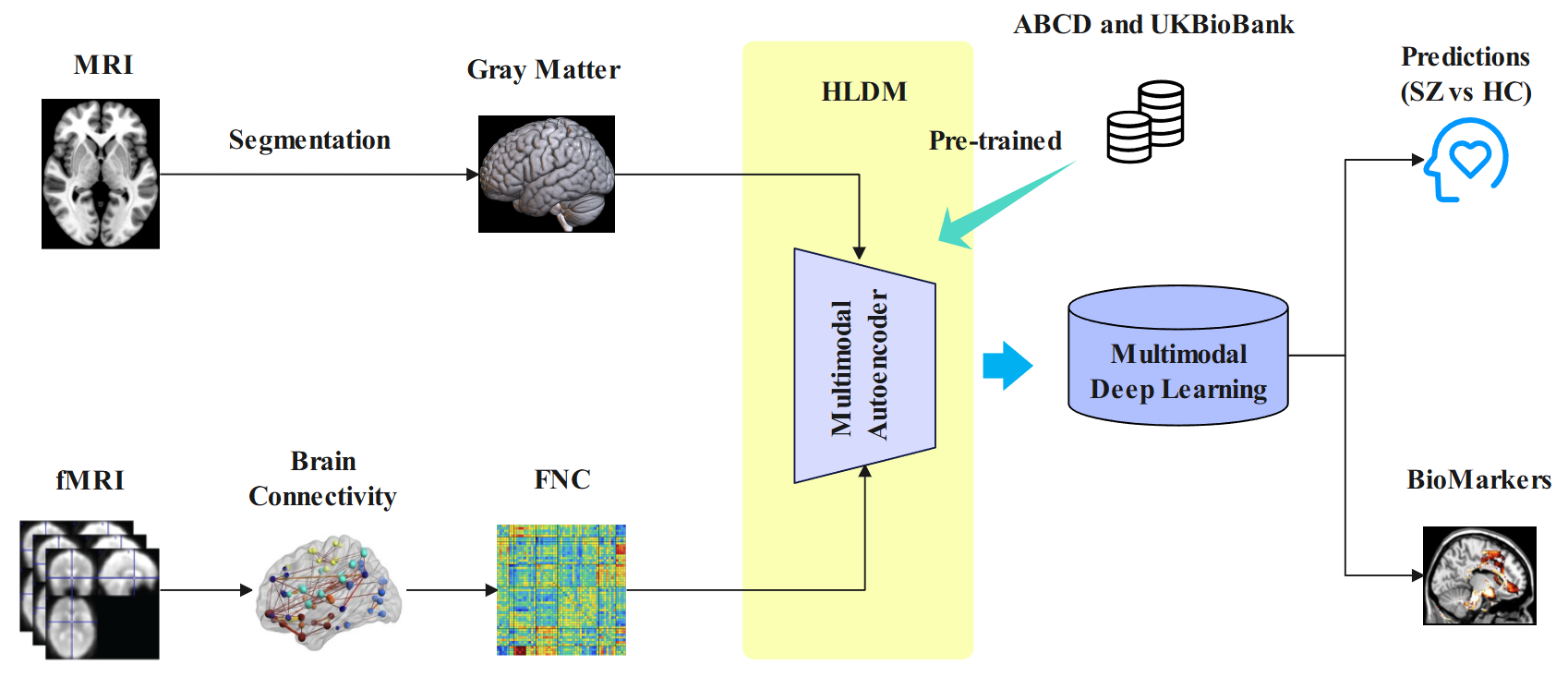

Multimodal foundation models hold considerable research potential in the medical field, particularly when applied to structural and functional brain data. In this study, we propose BrainFM, a multimodal MRI foundation model based on a composite variational auto-encoder (VAE) architecture. The model is trained using paired MRI and functional network connectivity (FNC) data from the ABCD dataset (N=12k) and part of the UK Biobank (N=43k). The model performs strongly in downstream tasks, including schizophrenia detection, where it achieves equal or superior accuracy using only 20% of the data previously used for fine-tuning in related studies. Furthermore, we use attention weights to visualize critical brain regions, facilitating the identification of potential areas associated with schizophrenia.

A Hybrid Latent Diffusion Model for Multimodal Fusion and Prediction from Neuroimaging Data

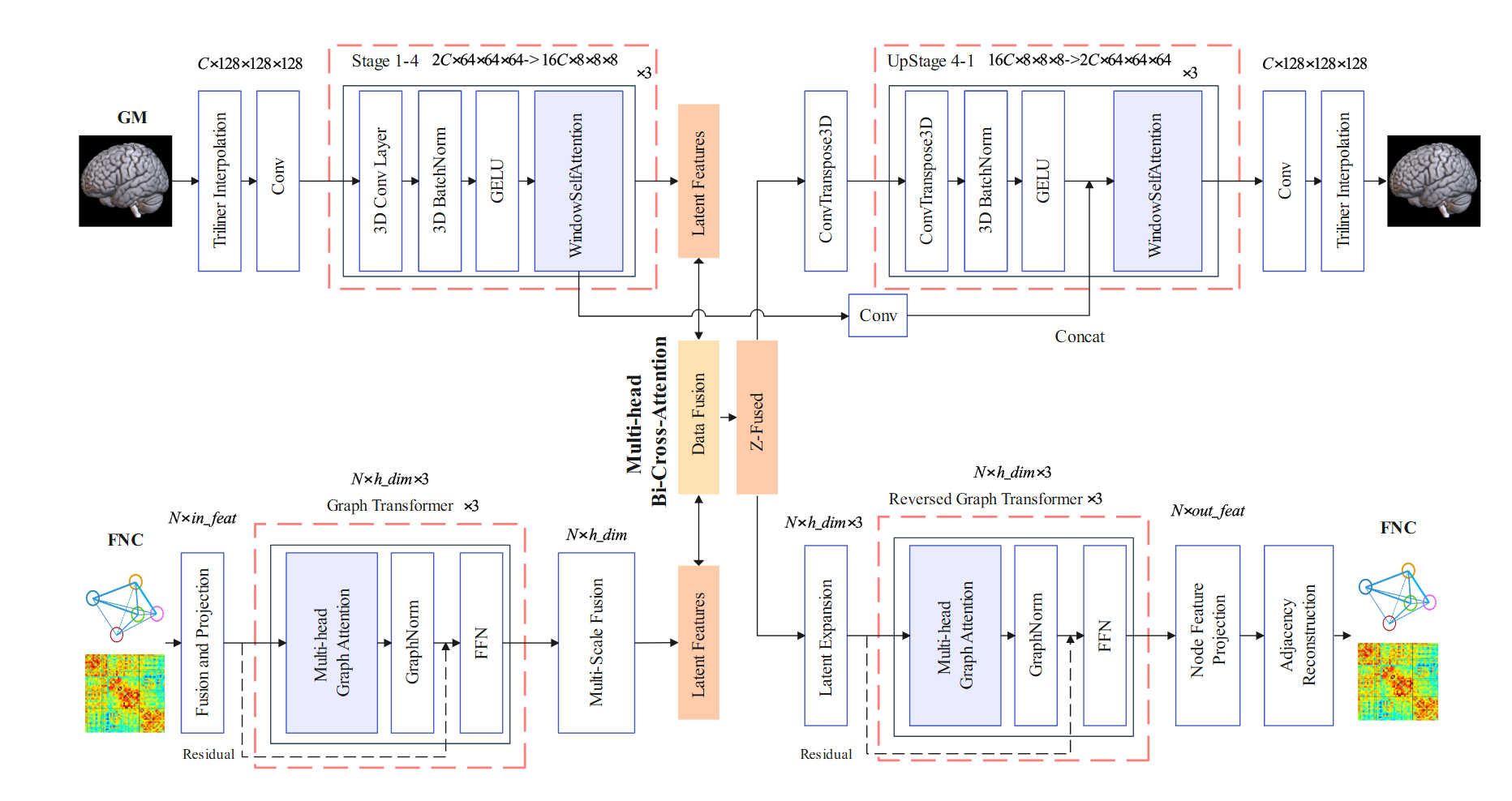

Neuroimaging generates high-dimensional structural and functional data, yet existing models often oversimplify or fail to capture their complex and nonlinear relationships. This study introduces an advanced artificial intelligence (AI) framework leveraging multimodal neuroimaging (structural and functional MRI data) from multiple sites to perform predictive neuroimaging for identifying brain-based biomarkers. We developed a hybrid latent diffusion model (HLDM), a novel data augmentation technique that uses an autoencoder pre-trained on 53.2k samples to generate high-fidelity paired gray matter (GM) and functional network connectivity (FNC) data, enhancing model robustness. Our classification model, MultiViT2, employs modality-specific CNN-Transformer and graph transformer architectures, fused via a bi-cross-attention mechanism for robust disease prediction. Additionally, a multi-scale attention mapping technique, using gradients and attention weights, enhances interpretability by localizing disease-relevant brain regions and connections. Applied to schizophrenia, a disorder marked by intricate structural and functional brain changes, our approach achieved state-of-the-art performance (AUC 0.906), surpassing previous benchmarks (AUC 0.833). HLDM-driven augmentation significantly improved accuracy without additional real data, while multi-scale attention effectively reduced noise and identified critical biomarkers. Our study highlights the hippocampus, cerebellum, and insula as particularly relevant to the prediction of schizophrenia. In sum, our framework advances multimodal data integration, biomarker discovery, and interpretable AI for complex neuropsychiatric disorders.
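
The bi-cross-attention fusion mentioned in the abstract can be illustrated with a minimal NumPy sketch. This is not the MultiViT2 implementation: the single-head formulation, the random projection weights, the token counts, the mean-pooling step, and all names (`cross_attention`, `bi_cross_attention`, `gm_tokens`, `fnc_tokens`) are assumptions chosen for illustration only. The idea shown is that each modality's tokens attend to the other modality's tokens, and the two attended summaries are concatenated into one fused vector.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, context, w_q, w_k, w_v):
    """Single-head attention in which `queries` attend to `context`."""
    q = queries @ w_q
    k = context @ w_k
    v = context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (n_queries, n_context)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights


def bi_cross_attention(gm_tokens, fnc_tokens, params):
    """Hypothetical two-way fusion: GM attends to FNC and FNC attends to GM."""
    gm_out, gm_w = cross_attention(gm_tokens, fnc_tokens, *params["gm_to_fnc"])
    fnc_out, fnc_w = cross_attention(fnc_tokens, gm_tokens, *params["fnc_to_gm"])
    # Assumption: mean-pool each stream and concatenate into one fused vector.
    fused = np.concatenate([gm_out.mean(axis=0), fnc_out.mean(axis=0)])
    return fused, gm_w, fnc_w


rng = np.random.default_rng(0)
d = 16                                        # illustrative embedding size
gm_tokens = rng.normal(size=(8, d))           # stand-in for GM features
fnc_tokens = rng.normal(size=(12, d))         # stand-in for FNC features
params = {
    name: tuple(rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
    for name in ("gm_to_fnc", "fnc_to_gm")
}
fused, gm_w, fnc_w = bi_cross_attention(gm_tokens, fnc_tokens, params)
```

In this sketch the attention weight matrices `gm_w` and `fnc_w` are returned alongside the fused vector because weights of this kind are what an attention-based interpretability map would inspect; how the paper combines them with gradients is not specified here.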